feature image via The Verge.

Two weeks ago, Microsoft debuted a Twitter bot modeled after a generic teenage girl. Her name was Tay. Within twenty-four hours, the internet transformed Tay into a Trump-voting, Hitler-loving racist that called people Daddy. Microsoft took her offline. In response to the debacle, I sat down with three amazing bot creators: Darius Kazemi, Allison Parrish and Thrice Dotted. We talked about the implications of research, conversation, and pain in interactions with bots. As a researcher, part of my issue with Tay was the lack of awareness or programming around what conversations entail, from the painful to the joyful. What counts as funny for one person can count as erasure for another. Our technology can amplify or silence voices, and bots are not outside of that scope. So how do we create things that are complex and full of a range of emotions and interactions but are not transphobic, misogynistic, racist and homophobic?

Is it possible? I think so.

A bit more about our bot creators:

Darius Kazemi is an internet artist under the moniker Tiny Subversions. His best known works are the Random Shopper (a program that bought him random stuff from Amazon each month) and Content, Forever (a tool to generate rambling thinkpieces of arbitrary length). He cofounded Feel Train, a creative technology cooperative.

Allison Parrish is a computer programmer, poet, educator and game designer who lives in Brooklyn. Allison’s recent book, @Everyword: The Book (Instar Books, 2015), collects every tweet from @everyword, a Twitter bot she made that tweeted every word in the English language over the course of seven years—attracting over 100,000 followers along the way.

Thrice Dotted is a Seattle-based language hacker and creator of several Twitter bots, such as @wikisext and @portmanteau_bot. They are a graduate student working on natural language processing, and definitely a cat person.

[Editor’s note: this interview was edited for grammar, length and flow. The editor also added context and contextual links where appropriate, so readers with varying levels of technical background can approach the piece with open hearts and smarter bots!]

Darius: Hello!

Allison: Hi!

Thrice: Hi!

Caroline: So I’m excited to chat about bots for Autostraddle. I was wondering if y’all wanted to give a little background on yourselves and bots. What interests you about them?

Thrice: I created my first bot (@portmanteau_bot) in order to automate away one of my arguably more annoying habits: making up portmanteaus. I had no idea there was a thriving Twitter bot community until people started saying nice things about the bot! Finding people who found these things interesting in turn made me more interested, and so it spun off from there. Also I work on natural language processing as a graduate student, and I really wanted to channel that knowledge into something more creative than quantitative.

Darius: I’ve made weird tech my whole life but I made my first Twitter bot in May 2012. I was actually pretty inspired a few years earlier by Allison’s short e-poetry projects, and also a book called Alien Phenomenology by Ian Bogost. The book talked about the virtues of building things over writing essays and I wanted to try my hand at making arguments by making objects. Bots were a natural way to do this since I was already on Twitter.

Allison: My interest in bots primarily comes from my interest in computational creativity, specifically computer-generated poetry. I made @everyword in 2007, which I guess was my first “bot,” though @everyword and most of the other bots I make aren’t really interactive in any way, unlike many of the bots that have been in the news lately. I tend to think of bots more as a kind of digital graffiti or as a situationist-ey intervention in public space than anything else.

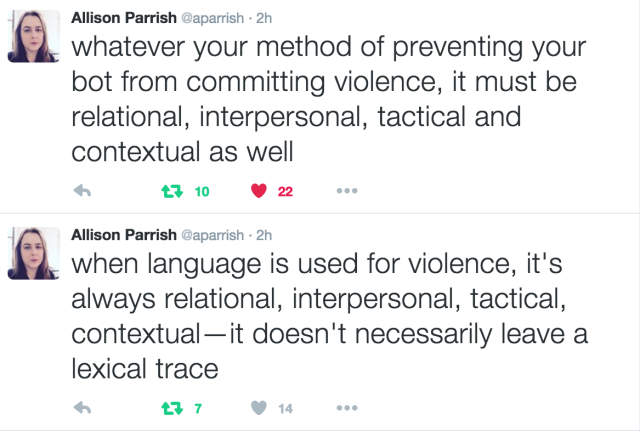

Caroline: Allison, you had this really great tweet about lexical constructs.

I was wondering if y’all wanted to expand into that. Do you think about the politics of language?

Allison: Ah, okay. Language has the capacity to cause harm, in a number of different ways. But when language is harmful, it’s because of the intention behind it. And intention doesn’t inhere in the text, not in a reliable and predictable way, at least. Insults, threats, intimidation: those all operate on a level below the actual surface lexical features of language. Unfortunately, the surface lexical features of language are really all that computer programs have when working with language, plus whatever other context can be turned into data to feed into the procedure. So you’re never going to be able to make a computer program that generates harmless language 100% of the time. What that means, and to your question, is that it’s impossible to make a bot with as wide a range of possibilities for interaction as Tay has and expect it to just be “set it and forget it.”

Thrice: To add on to Allison’s point—we’ve hardly figured out how machines can infer semantics from natural language, and pragmatics is yet another leap beyond that.

Caroline: So to interaction, that’s what I found really fascinating about Tay on Twitter. Where a bot is placed and what that system looks like can create new and unintended interactions.

Allison: Like, I’d enjoy playing around with Andrej Karpathy’s selfie-evaluating code on its own, in an academic setting, but as soon as it’s in the public as “this will tell you whether or not you’re attractive,” it seems awful.

Thrice: The miracle of machine learning is supposed to be that nobody has to hand-engineer these scripts anymore, but that doesn’t mean that it’s unnecessary to engineer the shape of the intended interactions.

Caroline: A bot in AIM or within a website is a lot different than a bot that exists on a multi-person platform, such as Slack or Twitter. And then the designs of Twitter and Slack are so different. Those differences can be amplified by the bot, e.g. by tagging people. That’s not even covering things like word choices. Context, meaning what the bot is for and where it lives, is pretty key, especially for design.

Allison: Right! The problem with Tay isn’t that the output included bad words, it’s the way in which those words were displayed—the context of the medium and the interaction. What the speaker is and where the speaker is ARE key parts of the context of language. That’s one thing I think researchers in this field forget, that language isn’t just text. Whatever your corpus is, it’s just a tiny slice of the practice of the linguistic behavior that it was extracted from.

Darius: Imagine if Tay had included some kind of annotation: “btw, here’s how I learned to say this.” Where the end user doesn’t have to guess why the bot is doing what the bot is doing.

Allison: A+ to that suggestion, Darius.

Darius: This is kind of an aside, because games AI is a little different from academic and industrial AI, but there’s a relevant story here. When the game Half-Life came out in 1998, people were talking about how amazing the AI was. And in fact, the AI didn’t take any massive leaps. But when the enemy was making decisions, like to flank the player, the enemy would yell “I’m flanking the player!” Because players knew what the intent of the AI was, they had more context as to why it was running over to some corner of the room, and it seemed smarter. Even though AI had been doing that for a few years!

Caroline: For Tay, I’ve heard rumblings like “BUT THE RESEARCH THAT WAS GENERATED,” weighting the research over the interaction. But what you suggested, Darius, sounds like much more interesting research, especially for users to participate in.

Thrice: That’s a super difficult problem in deep learning right now—the fact that what the algorithm is actually learning isn’t easily comprehensible to humans. Additionally, I found the research argument very lazy, because there is a large amount of hate speech on the internet. A bot didn’t need to tell us that. I’ll take this moment to mention FAT ML, which isn’t about text but is a workshop that’s thinking about these problems.

Allison: Beyond that, I mean, ethics in research is a Known Field Of Study And Concern, right? [The] idea that it’s okay to hurt someone in the pursuit of Science is pretty dangerous, and researchers in other fields have known this for a long time.

Caroline: Let’s talk about corpora used to train bots for a second. You can download available data from Reddit. If you train a bot on that entire corpus without acknowledging or designing for the fact that it encompasses a wide range of boards, from incredibly racist ones to people talking about makeup, you will get a bot that covers all of that. While this is specific to Reddit, think about that corpus and how it was generated. Reddit has VERY particular rules and those rules are enforced in specific ways. What your corpus is, where it came from, and how it was created are often left out of the conversation. It’s like using Fitbit data in lieu of health data. Fitbit isn’t a great marker of ‘health’ at all, but of ‘activeness.’ And those are different things.

Thrice: [For example,] Who cares if this model trained on news corpora is sexist due to the fact that 75% of the people discussed are men? It does a good job of predicting things on a held-out news corpus, which also contains these same biases.

Darius: Just to clarify, the “held-out” corpus Thrice mentioned is the sort of blind test that you use to see if your machine learning model worked. So like, you have a million news articles, you split them in two, train on 500,000 articles, and then test on the other 500,000 articles.
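[Editor’s note: for readers who want to see the split Darius describes, here is a minimal Python sketch. The corpus is a made-up toy list rather than real news data, and the names `split_held_out` and `share_about_men` are ours, chosen for illustration. It also hints at Thrice’s point: a randomly held-out half tends to inherit the same skew as the training half, so doing well on it says little about whether that skew is acceptable.]

```python
import random

def split_held_out(articles, held_out_fraction=0.5, seed=0):
    """Shuffle a corpus and split it into (training set, held-out test set)."""
    shuffled = list(articles)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - held_out_fraction))
    return shuffled[:cut], shuffled[cut:]

def share_about_men(articles):
    """Fraction of articles whose (toy) subject label is 'man'."""
    return sum(1 for _, subject in articles if subject == "man") / len(articles)

# Toy corpus (an assumption for illustration): 75% of articles discuss men.
corpus = [(i, "man" if i < 750 else "woman") for i in range(1000)]
train, held_out = split_held_out(corpus)

# Both halves are the same size and carry roughly the same skew.
print(len(train), len(held_out))
print(round(share_about_men(train), 2), round(share_about_men(held_out), 2))
```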

Allison: I have a weird analogy for this, which is that every data set has an emotional charge, whether that data set is a corpus of Shakespeare’s plays or live updates from Twitter or whatever. And when someone interacts with the output of working with that data, you’re passing that charge on to them. It’s your responsibility as a researcher or artist or whatever to recognize that charge and find ways to mitigate it, so that people can interact with your project in meaningful ways that don’t harm them.

[at the same moment]

Thrice: I would argue that no data set is neutral.

Allison: I’m having a hard time imagining what a neutral data set would even be? Jinx!

Thrice: Given that the whole point is to learn the informative biases, it has to be biased. The question is, what constitutes an informative bias versus noise, or a correlation that should not be in the model?

Allison: Though it should be noted that the reason to point out that “no data set is neutral” is not to say “never use data, you’ll just end up OFFENDING SOMEONE.” It’s just that you need to take care and be vigilant, open-minded and gracious when people point out your mistakes.

Caroline: One could even argue for the ability to use ‘dangerous’ or very, very emotionally charged data; it’s just a matter of thinking about how it’s articulated, massaged, or presented to others. Darius, I am super curious: could you talk about Two Headlines, [your comedy bot that mixes up two headlines to make them into one], and the design around that specifically? You ended up restructuring the bot and the data corpus?

Darius: Sure. The corpus of the bot has always been the same. I built it based on a little provocation from Allison in 2014. She sent me a link to someone telling a joke where they confuse two things that are in the news on a given day. The corpus has always been Google News, specifically whatever is on the front page of Google News right now in the various categories. It takes one headline, and the subject from another, and swaps the new subject into the headline. So “The Pope bestows sainthood on Mother Teresa” might become “The Pope bestows sainthood on Microsoft.” Generally this works well and I don’t even really have to filter the output because the corpus is all professional news sites. And the jokes work because news headlines are all written in a really recognizable style.
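[Editor’s note: the swap Darius describes can be sketched in a few lines of Python. This is our illustration, not the bot’s actual code. It assumes each story already comes with its subject identified, and it uses a hard-coded list where the real bot reads Google News. The second headline and the function names are made up for the example.]

```python
import random

def swap_subject(headline, old_subject, new_subject):
    """Replace the subject of one headline with the subject of another."""
    return headline.replace(old_subject, new_subject)

def two_headlines(stories, rng=random):
    """Pick two different stories and put the second one's subject into the first headline."""
    first, second = rng.sample(stories, 2)
    return swap_subject(first["headline"], first["subject"], second["subject"])

# Hypothetical stand-in for the day's front page.
stories = [
    {"headline": "The Pope bestows sainthood on Mother Teresa", "subject": "Mother Teresa"},
    {"headline": "Microsoft takes chatbot offline", "subject": "Microsoft"},
]

print(two_headlines(stories))
```

[Because the headline keeps its professional news phrasing and only the subject changes, the output still reads like a headline, which is what makes the joke land.]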

But like, news sites don’t use the N-word in headlines and all that, so I thought I wouldn’t need to really tweak it at all. Turns out that a minority of fans of the bot found a certain kind of headline really funny. Stuff like “Bruce Willis looks stunning in her red carpet dress.” I don’t find that funny. It’s a transphobic joke, based on the idea that a man in a dress is intrinsically hilarious.

So I figured out a way to filter that sort of thing. There’s a library called Global Name Data which provides the probability that a given first name is male/female. It’s not perfect, still based in gender binaries, but I like that it’s probabilistic. So it understands that “Casey” might be a man or a woman.

I took Global Name Data and made a library where you can give it a name and it gives you the probability of its gender. And then I just check my subjects to see if they’re flagged as opposite gender. If they’re opposite gender, I throw away the joke and make a new one. So it’ll say “Hillary Clinton talks about growing up as a little Palestinian girl” but not “Bruce Willis talks about growing up as a little Palestinian girl”.
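[Editor’s note: here is a minimal Python sketch of the kind of check Darius describes. It is not his library and does not use the Global Name Data interface. The probabilities in `P_FEMALE` are invented for illustration, and the function names and the 0.9 threshold are our assumptions. A swap is rejected only when both names are confidently gendered and disagree, so ambiguous names like “Casey” and non-person subjects pass through.]

```python
# Hypothetical probabilities that a first name is female. A real filter
# would look these up in name data rather than hard-code them.
P_FEMALE = {"hillary": 0.99, "bruce": 0.01, "casey": 0.5}

def likely_gender(full_name, threshold=0.9):
    """Return "female", "male", or None when the name is ambiguous or unknown."""
    parts = full_name.split()
    if not parts:
        return None
    p = P_FEMALE.get(parts[0].lower())
    if p is None:
        return None
    if p >= threshold:
        return "female"
    if p <= 1 - threshold:
        return "male"
    return None

def keep_joke(original_subject, new_subject):
    """Reject a swap only when both names are confidently gendered and differ."""
    a = likely_gender(original_subject)
    b = likely_gender(new_subject)
    return a is None or b is None or a == b

print(keep_joke("Hillary Clinton", "Bruce Willis"))   # confidently different: thrown away
print(keep_joke("Hillary Clinton", "Casey Affleck"))  # ambiguous name: kept
```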

I just don’t want these bots to tell jokes that I wouldn’t tell. I want my bots to make people happy.

Allison: I am personally very grateful for the transphobic joke detection for Two Headlines, since it allows me to be in the audience of that bot when I wouldn’t otherwise be able.

Caroline: What’s different or fascinating about Two Headlines, Tay, and Everyword is that they all do different things. Everyword doesn’t respond to interactions but tweets single words. Two Headlines is effectively a Markov chain, a combo of two headlines that creates new sentences. Tay was all about interactions.

Darius: Mark Sample talks about “closed” and “open” bots. Everyword is “closed,” it has exactly X number of things it can say. An “open” bot pulls in input from the wider world. Everyword was, in that sense, completely pre-programmed. I mean, I think it’s incumbent on anyone who makes anything to think about it really hard?

Caroline: Right, and what those artistic [and] engineering implications are for others.

Darius: I don’t understand why you wouldn’t. I literally just don’t understand it.

Caroline: In graduate school, I was in a design class taught by Clay Shirky, and every time we proposed something, he was like, “great, awesome, what does it do? Okay now what could possibly go wrong?” And we’d have to outline all the ways in which our thing could fail or be misused.

Allison: I think a question that sometimes doesn’t occur to people is “who gets to be in the audience of this thing I’m making?” If you don’t think about that question explicitly, then the answer will always be “people who are just like me.”

Darius: As engineers that’s what you’re trained to do for non-social outcomes! I went to engineering school and we had to consider everything that could go wrong in terms of like, “Will this work in cold weather? Will this work under considerable load?” But you’re never taught to think about the end user, typically. And especially not the people in proximity to the end user. But to me it’s the same attitude. Asking “what if a disabled person saw this? How would they feel?” is similar to asking “what if it were below freezing, would this device still work?”

Caroline: Totally that, that’s the way I design.

Allison: The thing is, I’m supposed to be in the audience of something like Tay. Like, because of my job and my arts practice, I’m expected to engage with and experience those kinds of things.

Thrice: There’s only so much that people can make work to begin with, so they hyperfocus on one problem (“let’s generate grammatical natural language!”) rather than the whole lot of very very real complex problems (“let’s generate language that isn’t horribly offensive!”).

Allison: And it kind of sucks to be in the position of wanting to interact with something out of interest and necessity, but then realize that the makers of the thing didn’t have me in mind when thinking about their audience.

Thrice: These issues are elided because the audience is often assumed to be just like the people who are making it.

Allison: Some people are expected to bear the extra burden of grounding the emotional charge of the source text, because the creators didn’t do a sufficient job of mitigating it on their end.

Interested in bots? Today, April 9th, is the third ever Bot Summit! Check it out! Darius is live streaming the event from the Victoria and Albert Museum. Additionally, Allison, Thrice, and Darius are in a show coming up at the And Festival.

Thanks for bringing all these bot creators together! It’s really interesting to hear this conversation.

I’m so glad you enjoyed it!

This was fascinating to read. Thank you!

I’m so glad you enjoyed it!

This was incredibly interesting. At first I didn’t know if I was “in the audience” because of my limited knowledge w/r/t bots and internet data sets (and algorithms? codes?), but answers to many of my questions were revealed as the article went on. Also, I referenced some of Clay Shirky’s research in my thesis about the role of technology (particularly in relation to women) in the 2009 Green Movement in Iran, so that was a pleasantly unexpected shoutout! I would love to be in a class with Shirky. Excellent conversation with an awesome panel–thanks Caroline and Autostraddle!

Great discussion! I would love to add all of you on linkedin :)

“Within twenty-four hours, the internet transformed Tay into a Trump-voting, Hitler-loving racist…”

In other words, your average comment poster on many sites! =)

This is not something I ever would have thought I’d care about, but it was so interesting and I’m glad I read it. Thanks guys

anytime!

Excellent discussion, thanks for doing this everyone involved. I’ve never really gotten into roundtables before (cuz it usually feels like there’s a lot of people talking past one another), but I loved this one so much! :)

this was so interesting! very articulate and informed discussion of intersection between tech and social justice; ethics. thank you!

p.s. i don’t have a twitter bot but I did start a twitter account for my own enjoyment called compassion_bot a few years ago, kind of like webcomic humor for someone who can’t draw. it’s dumb but i like it; pretending to be a little compassionate robot with a Twitter account, interacting with humans even though it can’t feel compassion itself, with its little square-shaped robot heart.

When I told my Buddhist friend about it, he assumed it was automated (a real twitter bot), ‘reciting’ mantras or prayers by tweeting them, similar to Tibetan monk practice. When monks do this, it’s to build up good mental and emotional habits (mindfulness, compassion). I guess if a twitterbot does it, or a human with a twitter account, it could help add to positive merit in the world. Different than what the round-table was discussing, but some interesting connections too. I wonder if there is an actual Twitterbot that does this already?

like a tech version of prayer wheels, I meant to say.

This is fascinating, thanks!

Ah! This is awesome! I had heard of (and love the work of) Allison Parrish before! I have a couple bots I want to make at some point. :)

i’m so glad yall enjoyed this!

This was such a great and informing article! A lot of designers who make things with technology (i.e. robots, apps etc) think their work is neutral. This again shows it is not. Thanks again for this piece!